Head movements are commonly interpreted as a vehicle of interpersonal communication. For example, in daily life, human beings read head movements as expressions of agreement or disagreement in a conversation, or even as a sign of confusion. Gaze direction can also be used to direct a person's attention to a specific location. As artificial cognitive systems with social capabilities grow in importance, driven by the recent evolution of robotics towards applications that require complex, humanlike interaction, gaze and head pose estimates become an important feature.

During the CASIR project we developed a robust solution based on a probabilistic approach that inherently deals with the uncertainty of sensor models and, in particular, with the uncertainty arising from distance, incomplete data and scene dynamics. This solution comprises a hierarchical formulation in the form of a mixture model that loosely follows how geometrical cues provided by facial features are believed to be used by the human perceptual system for gaze estimation.

To evaluate our solution, a set of experiments was conducted to assess the performance of the hierarchy thoroughly and quantitatively. The experiments were devised to test system performance for many different combinations of head pose and eye gaze direction, and for different distances in a dynamic setting. Eleven subjects (3 female and 8 male, 8 with white complexion and 3 with dark skin colour), with ages ranging from 8 to 43 and heights ranging from 1.3m to 1.89m, were instructed to fixate a target placed at a position with known 3D coordinates throughout each trial.

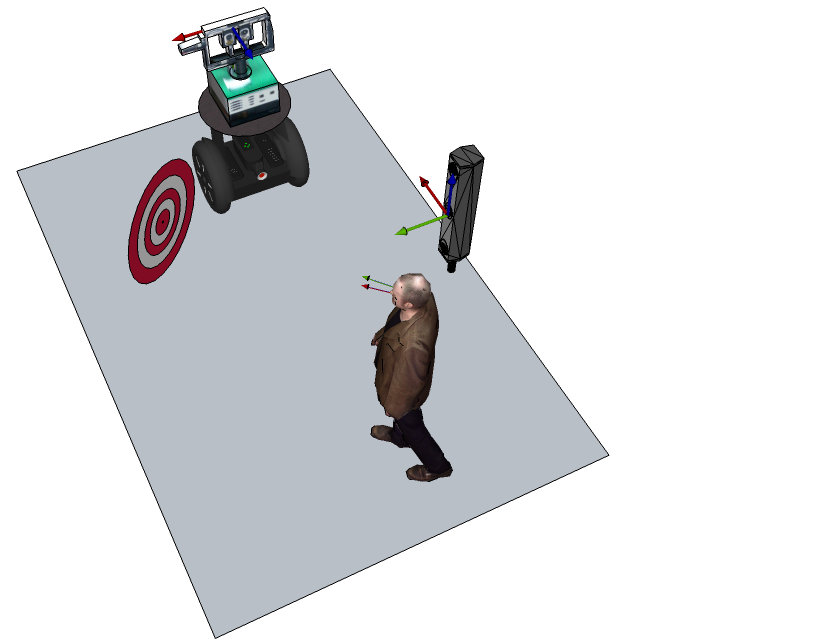

Experimental setup. In (a), the experimental plan and protocol are presented, showing the sensor rig mounted on a robotic head, the Optotrak Certus used to obtain 3D ground truth and the gaze target. In (b), a photo of the actual experimental setup and the Kinect 3D sensor is shown – note that, as opposed to the schematic in (a), the targets (three coloured stripes, at 1.43m, 1.57m and 1.68m from the ground, respectively) are on the same side as the Optotrak. This is because the Optotrak can only track its optical devices within a “cone of visibility” (markings on the floor show the cone’s limits), which constrains the usable space available for the experiments. The subjects were asked to walk from a predefined starting distance of about 3m to about 1.5m from the Kinect sensor, following a relatively free path within that usable area and keeping their gaze fixed on one of the targets throughout each trial. They were also instructed to stop at three different midway positions along the path and, at each of those positions, to slowly turn their head up, down, left and right without losing their gaze focus on the designated target.

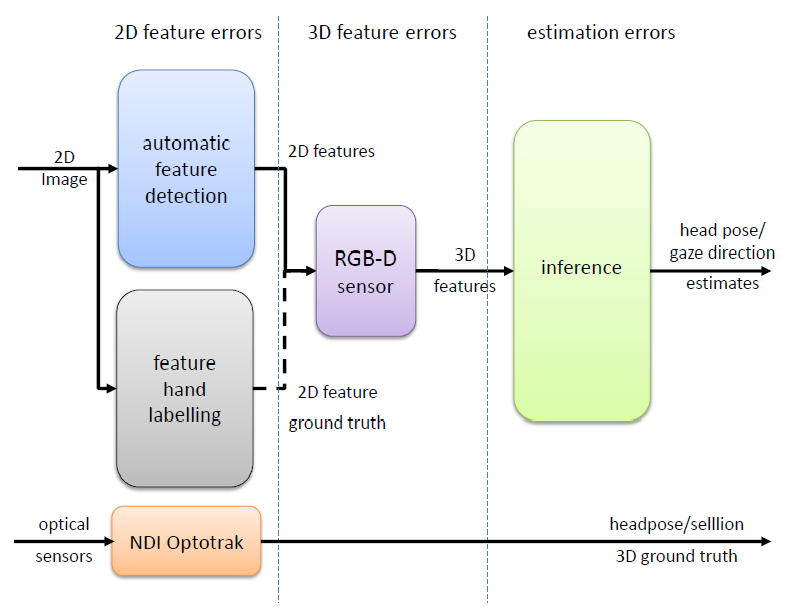

A Northern Digital Inc. (NDI) Optotrak Certus motion capture system, calibrated with the Kinect for registration, was used to obtain 3D ground truth pertaining to the position of the subjects and their head pose throughout the experiment. The data processing flow is shown below:

The database is composed of 9 different data types, stored using ZIP compression.

| Data Type | Description |
| --- | --- |
| RGB images | Stored in a folder in .png format. |
| Depth images | Stored in a folder in .pcd format (point cloud data). |
| Gaze vector | "Ground truth" 5D gaze vector (sellion position, phi, theta_head) in .txt format. |
| Sellion position | "Ground truth" head center 3D position (x, y, z) in .txt format. |
| Fixation point | "Ground truth" point of fixation 3D position (x, y, z) in .txt format. |
| Phi angle | "Yaw" gaze vector orientation in .txt format. |
| Theta angle | "Pitch" gaze vector orientation in .txt format. |
| 2D feature positions | "Hand labeled" 2D facial features in .txt format. |
| 3D feature positions | "Hand labeled" 3D facial features in .txt format. |
| Experimental setup | Transformation matrix, rotation matrix and the translation vector used in each session in .txt format. |
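
To illustrate how the ground-truth quantities listed above relate to each other, the following minimal Python sketch derives a yaw/pitch pair from a sellion position and a fixation point, and applies a rotation matrix and translation vector to a 3D point. It is only a sketch under stated assumptions: the axis convention (x to the right, y up, z forward), the use of degrees, and the function names `gaze_angles`, `gaze_vector_5d` and `apply_rigid_transform` are hypothetical and are not taken from the database files, whose exact conventions should be checked before reuse.

```python
import math


def gaze_angles(sellion, fixation):
    """Return (phi, theta) in degrees for the ray from sellion to fixation.

    ASSUMED convention (not confirmed by the database): x points right,
    y points up, z points forward; phi is the yaw about the y axis and
    theta is the pitch above the x-z plane.
    """
    dx = fixation[0] - sellion[0]
    dy = fixation[1] - sellion[1]
    dz = fixation[2] - sellion[2]
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    if norm == 0.0:
        raise ValueError("sellion and fixation point coincide")
    phi = math.degrees(math.atan2(dx, dz))      # yaw
    theta = math.degrees(math.asin(dy / norm))  # pitch
    return phi, theta


def gaze_vector_5d(sellion, fixation):
    """Pack the sellion position and the two angles into a 5-tuple."""
    phi, theta = gaze_angles(sellion, fixation)
    return (sellion[0], sellion[1], sellion[2], phi, theta)


def apply_rigid_transform(rotation, translation, point):
    """Map a 3D point with p' = R p + t.

    `rotation` is a 3x3 matrix given as three rows of three numbers and
    `translation` is a 3-vector; which frame maps to which (for example
    Optotrak to Kinect) is an assumption to verify per session.
    """
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


if __name__ == "__main__":
    # Hypothetical values, in metres, chosen only for illustration.
    sellion = (0.0, 1.60, 0.0)
    fixation = (0.5, 1.57, 2.5)
    print(gaze_vector_5d(sellion, fixation))
```

Keeping the angle computation separate from the rigid transform makes it straightforward to swap in a different axis convention once the one used in the .txt files has been confirmed.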